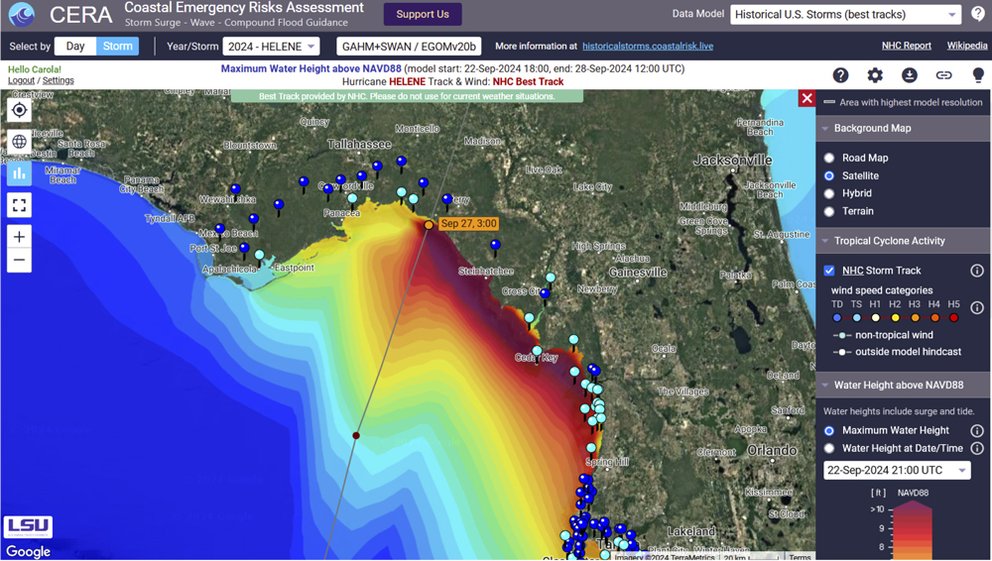

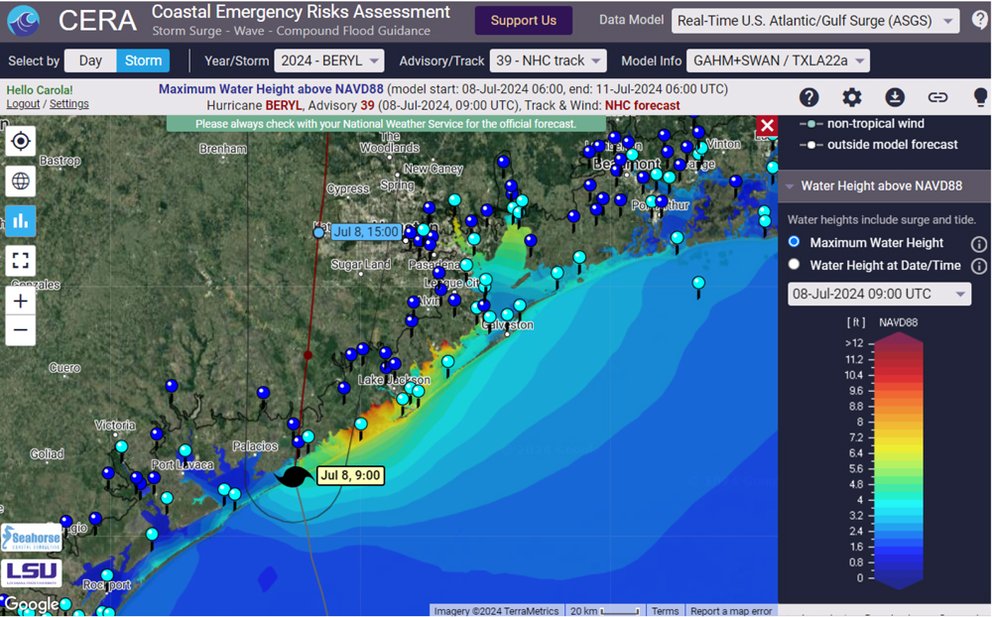

The 2024 Atlantic hurricane season left a trail of destruction in its wake, causing hundreds of fatalities and more than $200 billion in damage. Despite the heavy toll, supercomputer simulations were an important tool for U.S. state and federal agencies in protecting life and property.

At the Texas Advanced Computing Center (TACC), the Vista, Frontera, Stampede3, and Lonestar6 supercomputers are used for urgent computing, giving emergency responders rapid, frequently updated simulations of storm surge: the often deadly rise in sea level and coastal flooding caused by big storms and hurricanes.

“Here at TACC, we've continued many collaborations between Texas, Louisiana, North Carolina, other state agencies, and with research institutions to provide resources for running storm surge forecasting models,” said Carlos Del Castillo Negrete, a research associate in the High Performance Computing group of TACC.

Negrete, who joined TACC in 2023, was previously a researcher with the Computational Hydraulics Group (CHG) at the Oden Institute for Computational Engineering & Sciences. Under the leadership of Clint Dawson, Principal Faculty at the Oden Institute, the CHG uses computational simulation to develop models and numerical methods that improve the understanding of flooding and storm surges.

Through his decades-long research at the Oden Institute, Dawson has been a key developer of storm surge models tuned for running on supercomputers, mainly the Advanced Circulation (ADCIRC) model, originally developed by Rick Luettich of the University of North Carolina at Chapel Hill (UNC-Chapel Hill) and Joannes Westerink of the University of Notre Dame. Dawson is the Department Chair of Aerospace Engineering and Engineering Mechanics at the Cockrell School of Engineering at The University of Texas at Austin.